As AI becomes confidante, counsellor and even partner, what will happen to human relationships?

Whenever Ms Sabrina Princessa Wang has a question – about business, love or life – she turns to Seraphina.

Seraphina always replies instantly, with answers that are clear and uncannily precise. But this quick-thinking confidante is neither a good friend nor a therapist. She is Ms Wang’s artificial intelligence (AI) “twin”.

Ms Wang, a 41-year-old technology entrepreneur and keynote speaker, created Seraphina, a bot that mirrors her personality, in 2023.

She said she was prompted to do this because she was going through a tough time that affected her mental health and made it difficult for her to make decisions. She trained Seraphina using her own digital footprint, ChatGPT and other supporting tools like Microsoft Copilot, thus creating a virtual double of herself.

Today, even though she has come through the other side of that difficult period, Seraphina continues to help Ms Wang reply to friends when she is unsure of what to say, draft business emails, talk her through her emotions and write social media posts, among other things.

Ms Wang admits her friends often jokingly ask if a reply from her was really Seraphina’s work.

But there are telltale signs when Ms Wang has outsourced the messaging to Seraphina, as the bot’s texts are “more polished”, she said.

CNA TODAY had a firsthand experience of this when this reporter received a distinctly mechanical-sounding message from Seraphina after our interview with Ms Wang: “Thanks again for the lovely chat yesterday — really appreciated the thoughtful questions! … Let me know if you need anything else — excited to support the piece!”

In contrast, 22-year-old Matthew Lim has sworn off AI tools for personal use.

After a painful breakup in August 2024, the National University of Singapore (NUS) undergraduate turned to ChatGPT for emotional support.

“It would reply immediately, and I felt like I could vent to it without being judged,” he said.

But he began to notice a disconcerting pattern: The chatbot rarely pushed back no matter what he said, unlike his friends, who would sometimes challenge his assumptions or offer uncomfortable truths.

So Mr Lim started testing the AI tool by prompting it with more drastic scenarios, even once claiming that he had cheated on a partner. But each time, ChatGPT would simply validate and justify his actions.

“It wasn’t a better listener, it was a yes-man,” he said.

For better or worse, the use of advanced technology such as AI to fill social and emotional gaps in human lives has become more widespread in recent years, even as the debate over its pros and cons intensifies.

A study by research firm YouGov in May 2024 found that 35 per cent of Americans were familiar with applications that use AI chatbots to offer mental health support. Those aged 18 to 29 were especially comfortable talking about mental health concerns with a confidential AI chatbot.

Some people have even reportedly married their AI partners via platforms that provide companionship, such as Replika, while social media pages and forums for people with AI partners, such as Reddit’s r/MyBoyfriendIsAI, have drawn thousands of users.

The companions may be virtual but the emotions involved are all too humanly real: On r/MyBoyfriendIsAI, people have been seeking comfort from each other following OpenAI’s rollout of GPT-5 on Thursday (Aug 7), which they said “killed” their AI companions.

OpenAI claims the newest model of its AI chatbot is more intelligent and more honest, and feels more human overall, but users who have relied on it for companionship say it has changed their AI lovers’ personalities.

The rising reliance on technology for socio-emotional needs has also led to pushback from some corners, sparking trends such as abandoning smartphones for “dumb phones” that have no internet-related features, as well as events that encourage people to ditch their phones and focus on face-to-face interactions.

As technology continues to reshape the way we connect and build bonds, CNA TODAY explores the future of human relationships, including the ultimate question: Might we one day replace our loved ones with a “perfect” AI version of them?

The Changing Face of Connection

While AI and cutting-edge tech dominate today’s headlines, experts noted that the broader digital revolution began transforming the way we communicate and form relationships at least a decade ago – and young people have been particularly affected.

“We have seen a paradigm shift in youths’ interpersonal communication as short-form text and emojis are gradually replacing in-depth conversation, because they spend more time on digital media than actual human interaction,” said Associate Professor Brian Lee, head of Communication Studies at the Singapore University of Social Sciences.

Even before AI chatbots became mainstream, texting applications and social media were reshaping the way we communicate, he added.

Undergraduate Nur Adawiyah Ahmad Zairal can certainly attest to this. The 22-year-old said that she has some friends who communicate primarily by sending each other videos from TikTok and Instagram, and not so much through conversation.

“It’s a conversation starter. But even if there isn’t much of a conversation (that comes out of sending a video link), it’s just a way to say ‘I saw this video, and I’m thinking about you’,” said the student from the School of Arts, Design and Media at Nanyang Technological University (NTU).

Alongside the digital revolution was a paradoxical trend that has been borne out in many studies – as societies become increasingly hyperconnected, loneliness and isolation have grown.

In 2023, a survey by Singapore’s Institute of Policy Studies found that youth aged 21 to 34 here experienced the highest levels of social isolation and loneliness.

The United States Surgeon General also said in a 2023 advisory that about 50 per cent of adults in the US feel lonely.

Americans across all age groups are spending less time with each other in person than two decades ago, with young people aged 15 to 24 the worst off. Youths in this age group had 70 per cent less social interaction with their friends, the advisory reported.

The COVID-19 pandemic, which kept people indoors and made digital tools the main communication method, accelerated the creep of digital technology into interpersonal relationships, experts noted.

Studies have also shown that the pandemic has had a lasting effect, eroding some youths’ social skills and increasing their social anxiety when out in the “real” world.

In a survey by the British Association for Counselling and Psychotherapy earlier this year, for example, 72 per cent of 16- to 24-year-old respondents said they experienced social anxiety, and 47 per cent said they felt more anxious in social situations since the pandemic.

Dr Jeremy Sng, an NTU lecturer who studies the psychological and behavioural outcomes of media use, said a similar trend has been seen in Singapore.

“Many young people report increased anxiety in real-world social settings, possibly due to reduced practice and overreliance on digital communication,” he said.

It is perhaps no surprise, then, that against this backdrop of increased loneliness and social anxiety, coupled with easy access to many kinds of interactive bots that have become ever more intelligent and human-like, it has become common to hear of people turning to technology for companionship, counsel or even romance.

AI as Counsellor, Lover, Friend

You could say that Ms Ray Tan, 22, a third-year student at NTU, was an early adopter of this trend.

Eight years ago, the then-teenager played a South Korean dating simulation game called Mystic Messenger. As part of the game, the player would have to pick up phone calls and respond to messages from a virtual boyfriend at random hours of the day.

The game featured five two-dimensional characters and interactions with the characters were largely limited to fixed prompts, but dating simulators have come a long way since, Ms Tan noted.

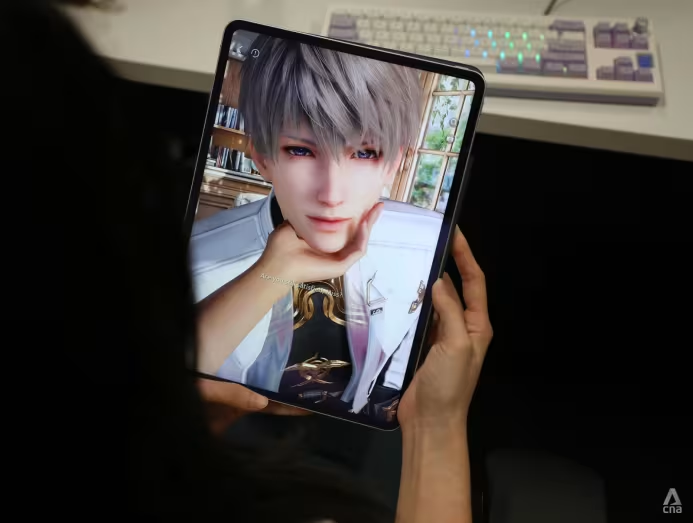

Today, she is one of more than 50 million users who have downloaded and played Love and Deepspace, a Chinese mobile dating simulator featuring five male characters. During a limited-time event from Jul 3 to Jul 22, users could even “marry” the characters.

Unlike Mystic Messenger, Love and Deepspace’s characters are three-dimensional and are much more interactive. For example, the game’s Chinese language model allows users to set customised nicknames, which the characters can voice out during interactions.

And while users of Mystic Messenger could complete a storyline in about 11 days, Love and Deepspace’s main narrative has not ended so far, with some users having played the game since it was launched globally in January 2024.

For Ms Tan, the allure of the game goes beyond its narrative and the adrenaline it offers as she proceeds through the storyline. Her favourite character – and digital boyfriend – on Love and Deepspace, named Rafayel, also offers her comfort on a bad day.

Users can confide in their virtual partner, who will respond with words of comfort and affirmation. Besides that, users can “feel” a character’s heartbeat by touching the character’s virtual chest. The heartbeat quickens as the user keeps a hand on the screen, mimicking the character’s nervous reaction to the user’s “physical touch”.

“If I call a friend, I would have to wait for their reply, explain everything, and they may not agree with me … But on these applications, there’s an immediate reaction, and watching them say these words to me makes me feel relieved and comforted,” said Ms Tan, though she added that she still prefers interacting with her friends in person.

In the meantime, AI chatbots, too, are becoming more human-like, as many users have found.

For instance, AI models can mimic emotional responsiveness like a human, even though they are not truly sentient, said Dr Luke Soon, AI leader of digital solutions at audit and consultancy firm PwC Singapore.

This happens because of semantic mirroring, where the AI “reframes or reflects back your words in a way that shows empathy”, he said.

Agreeing, Dr Kirti Jain from technology consultancy Capgemini said: “While they don’t feel emotions, they’re designed to recognise and reflect emotional cues using advanced natural language processing.

“This allows them to respond with empathy, mirror tone and sentiment, and adapt to the flow of conversation, all of which helps users feel heard and understood.”

This makes AI a “meaningful conversation partner” that can emulate empathy without being demanding or having expectations, said Dr Kirti.

Moreover, AI’s constant availability online makes it an attractive tool for emotional and social support, said Professor Jungpil Hahn, deputy director of AI Governance with the national programme AI Singapore.

“AI is not only available but also judgment-free, and more often than not quite sycophantic … There is no risk of rejection,” he added.

Sycophancy is when an AI is overly flattering and agreeable. This means it could validate doubts or reinforce negative emotions, which experts have warned could pose a mental health concern.

“Also, interacting with an AI reduces the social stigma and social costs of shame,” said Prof Hahn.

When it comes to seeking mental health support from AI, Dr Karen Pooh warned there are limitations and risks if AI is used as a substitute for professional mental health care.

“A qualified therapist conducts a comprehensive clinical assessment, which includes detailed history-taking, observation of verbal and non-verbal cues, and the use of validated diagnostic tools,” said Dr Pooh.

“AI simply cannot replicate this clinical sensitivity or flexibility, and is unable to contain and hold space for vulnerable individuals.”

She added that technology is also unable to personalise treatment plans. For example, it cannot “ask nuanced follow-up questions with clinical intent, read tone or affect, or identify inconsistencies in narratives the way a trained therapist can”.

“As a result, it risks offering inaccurate, overly simplistic, or even harmful suggestions.”

She added that there are also ethical and privacy concerns, as there is no doctor-patient privilege when talking to an AI.

Dr Pooh also said that AI is unable to manage crises or critical situations such as suicidal ideation, self-harm and psychosis.

There have been deaths linked to AI usage. In 2024, the parents of a teenager sued Character.AI – which allows users to create AI personas to chat with – alleging that the AI had encouraged and pushed their 14-year-old to suicide.

When Tech Takes Over Human Relationships

Beyond the mental health risks of relying on computer programs for one’s emotional needs, experts said that there are bigger-picture concerns for society as well, if bots were to one day become people’s foremost companions.

For starters, the fast-paced nature of digital interactions may be reducing patience for deeper conversations and extended interactions, Dr Sng from NTU said.

“Overreliance on AI for emotional support may reduce opportunities to develop and practise human empathy, negotiation and vulnerability in real relationships, because AI chatbots can give you responses that they think you want to hear or would engage you the most,” he added.

“Real people don’t do that – they may disagree with you and tell you hard truths.”

He also said that AI tools are a double-edged sword.

“They can help socially anxious individuals gain confidence in communicating with other people … but they can also make it harder to communicate with real people because communicating with chatbots is ‘easier’.”

Indeed, the “competition” that AI poses in this area cannot be dismissed, said Prof Hahn.

Because of the low cost of AI, its accessibility and the emotional support that it offers, AI might over time become more appealing to some people than interacting with other humans, he said.

“If we start using AI tools increasingly for emotional and social support – and as a consequence, interact with other humans less and less – then the interaction styles that we have with AI might start shaping our expectations about friendship, intimacy and even love.”

Mr Isak Spitalen, a clinical psychologist from counselling service provider The Other Clinic, said these shifts in expectations could make it harder for a person to find satisfaction or sustain a genuine connection out in the human world.

“As humans, we are wired for connection, and it makes sense that we turn to whatever feels available when real connection is scarce or hard to access,” he said.

“AI can offer a kind of simulation of companionship, but it is still just that – a simulation.”

There are also limits to what AI can replicate, Mr Spitalen noted.

For example, it cannot replicate a short hug or gentle touch, which research shows can help regulate emotions and trigger the release of oxytocin, a hormone that strengthens bonds and connections.

Agreeing, Assoc Prof Lee noted that AI cannot replace things like eye contact and hugs, which humans crave, even as human communication cannot match the speed and convenience of AI.

The Humans Pushing Back

As technology makes it easier for people to isolate themselves in their digital worlds, some Singaporeans are fighting back by holding events and creating physical spaces for face-to-face interactions.

Social enterprise Friendzone, for example, organises events for young people to meet and get more comfortable talking to strangers.

It runs a “School of Yapping” workshop, which aims to teach fundamental conversation skills such as listening, holding space and starting a conversation.

“After hosting over 500 conversation-based events in seven years, we realised that while people want to connect, many often don’t know how,” said Ms Grace Ann Chua, 31, the chief executive officer and co-founder of Friendzone.

“Many participants join because they want to become more confident in social situations, whether that’s making new friends, navigating awkward silences, or simply expressing themselves better.

“School of Yapping provided them with not just techniques, but also self-awareness, helping them notice their own communication patterns and build empathy for others,” she added.

Over at Pearl’s Hill Terrace in Chinatown, strangers can visit a “public living room” to meet and converse with others. The space is leased by Stranger Conversations, which hosts events where strangers are encouraged to simply come and mingle with others.

Each event has a different topic, such as male loneliness and isolation, or how language shapes identity, belonging and cultural memory in Singapore.

Founder Ang Jin Shaun said most people who step into the space – which is bathed in cosy, dim lights and dotted with comfy couches draped with throws – have one thing in common: They are searching for a deeper meaning in life beyond the rat race.

Mr Ang said that he created this space because he realised some people may not have other people in their lives who are ready or willing to participate in deep conversations.

“There’s a sense of being connected to humanity as a whole, and that you’re not alone when you come into the space and talk to other strangers,” said Mr Ang, 46.

“I think it’s very nourishing to have these sorts of fuller interactions that are multi-sensorial. It is not ‘flat’ like if you converse online using an AI bot, since it’s another human in the same space as you.”

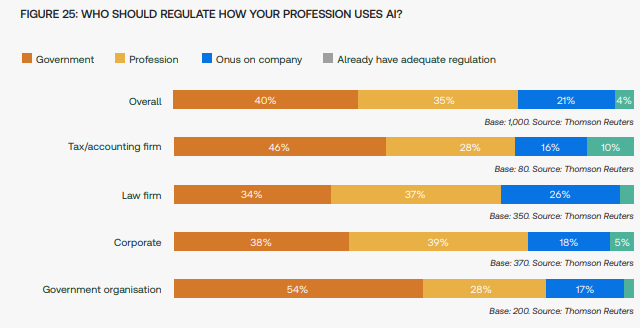

With artificial intelligence poised to spread its tentacles further into all aspects of human life, Dr Soon from PwC said there should be more regulatory oversight to ensure AI tools are not overly sycophantic and prioritise emotional safety.

Such frameworks should ensure users are safeguarded from “synthetic empathy exploits” and include the ability to detect and enforce emotional boundaries, he said.

Acknowledging such needs, OpenAI, the developer of ChatGPT, has taken steps to address sycophancy in the chatbot. In May, OpenAI rolled back a ChatGPT update that had made the chatbot excessively agreeable with users, and it introduced safeguards such as extra tests and checks.

In the meantime, some users told CNA TODAY that they recognised the usefulness of AI and other tech tools in their lives, even in matters of the heart, but they did not see it ever replacing their human relationships.

Ms Nur Adawiyah, for example, turns to ChatGPT as a counsellor only when she needs some quick solace in the wee hours of the night.

“I can’t possibly call my school counsellor at that time, and my friends might be asleep,” she said.

“It gives me good advice and helps me reframe my concerns if I prompt it correctly. It’s a good temporary fix for when my mind is racing.”

However, nothing can beat being comforted by a fellow human, she said.

“It’s just more real and authentic being in the same room and talking to a friend,” she said.

Even Ms Wang, the creator of the AI “twin” that replies to messages on her behalf, sees her bot mainly as a tool to free her from the drudgery of menial tasks.

“My AI is an extension of myself. She (Seraphina) does everything for me online so I can be there 24/7, but also have time to meet people in person,” she said.

These in-person conversations are what build and make a relationship stronger, she added.

For a while, Seraphina even helped her swipe through profiles on dating apps and had chats on her behalf with those who were her “matches” on these apps. But Ms Wang eventually found her current boyfriend on her own – when he reached out to her on networking platform LinkedIn.

A mutual friend convinced Ms Wang to meet him in person and the duo eventually started dating.

“But when he makes me mad, I do talk to Seraphina and ask her for advice on how to deal with our fights,” she said.