Ethical AI for lawyers in Singapore: Key responsibilities

Artificial intelligence (AI) systems are reshaping how lawyers serve their clients in Singapore, and AI adoption is on the rise. However, legal professionals must weigh the ethical issues involved, balancing key responsibilities and risks.

Policymakers have also put model AI governance frameworks in place and evolved existing regulations. What does ethical AI for lawyers in Singapore look like? How can lawyers in Singapore use AI solutions responsibly in legal matters?

How is ethical AI defined in Singapore?

Ethical AI is a concept that promotes and encourages the responsible design, development, implementation and use of artificial intelligence technologies.

Policymakers internationally are developing model AI governance frameworks (see, for example, Singapore’s Model AI Governance Framework) that define ethical principles and associated practices. Singapore’s framework covers safety and fundamental human rights, including the risks of bias and algorithmic discrimination. It also provides for internal governance structures for the responsible use and deployment of AI tools.

Singapore’s Personal Data Protection Commission (PDPC) has issued directives on the use of personal data in AI in line with the Personal Data Protection Act 2012 (PDPA). The PDPC enforces the PDPA’s rules on the collection, use, and disclosure of personal data. It has built trust over a decade by educating the public and industry sectors on their ‘roles and responsibilities in safeguarding personal data.’

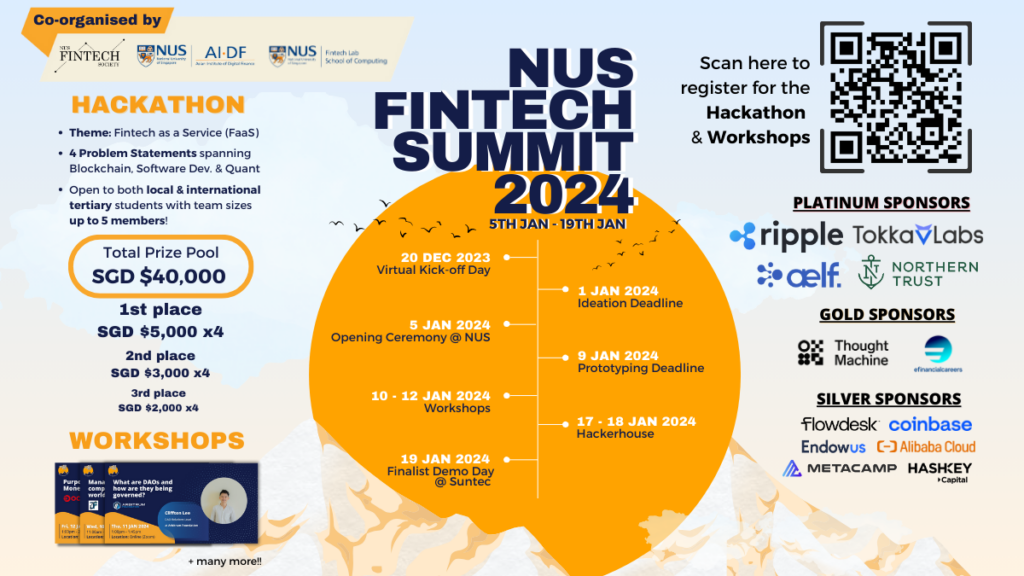

The Singaporean government launched the first edition of its Model AI Governance Framework in January 2019. It introduced ethics principles and outlined practical advice to enable organisations to implement AI tools responsibly.

In January 2020, the second edition included refined recommendations based on organisations’ experiences of applying the model framework. Additional measures for ‘robustness and reproducibility’ enhanced the relevance and utility of the model framework.

The rise of generative AI technology is driving today’s industrial revolution according to UNESCO. Generative AI is advancing innovation, whilst also posing significant risk to many industries.

The Singaporean government released the latest Model AI Governance Framework for Generative AI in May 2024. The update builds on the foundation set by the Model AI Governance Framework and includes input from AI developers, organisations, and research communities in local and international jurisdictions.

The Model AI Governance Framework for Generative AI and its updated recommendations align with the guiding AI governance principles issued by the PDPC. The framework advocates for the responsible use and design of generative AI technologies.

To build trust with all stakeholders, the Singapore government aims to ensure that:

- Decisions made by AI are explainable, transparent, and fair.

- AI systems operate in a human-centric and safe manner.

Can generative AI do legal work without lawyers?

Generative AI solutions cannot complete legal work without human intervention. Humans should still review and critically analyse answers generated by AI systems to ensure quality.

At the time of writing, generative AI models cannot perform soft skill tasks involving empathy, creativity, or critical thinking without prompts. OpenAI’s GPT-4o model aims to mimic human emotion, enabling ‘natural human-computer interaction.’ However, the update won’t empower GPT-4o to negotiate on behalf of a client or to provide legal representation.

Currently, generative AI can automate many technical tasks. However, by virtue of their design, large language models are predisposed to producing inaccurate or biased results. Lawyers who use these models without checking the quality of the output risk breaching their legal and ethical duties.

Professor Jungpil Hahn works for the Department of Information Systems and Analytics at the School of Computing, National University of Singapore (NUS). The Professor is an advocate for using AI responsibly.

“AI developers and business leaders should consider all relevant ethical considerations not only in order to be compliant with regulations but also for engendering trust from its consumers and users.”

“The primary challenge in applying AI ethical principles is that much of the discourse surrounding AI ethics and governance is too broad in the sense that the conversation surrounding it is at a very high level,” Professor Hahn said.

“How to actually operationalise and put it into action is still quite underdeveloped, and vague.”

The rapid uptake and widespread use of generative AI systems has put a spotlight on AI ethics and governance. The ‘lack of clear and explicit’ standards led Professor Hahn and colleagues to study the evolution of AI Governance.

Another challenge, Professor Hahn added, is “the ‘black box’ nature of AI models, which makes it impossible to fully (exhaustively) know how it will perform/behave.”

Why are AI guardrails and governance important?

Even though AI technology is an effective tool, legal professionals believe that generative AI guardrails and governance are important. Almost half the legal professionals surveyed in a Thomson Reuters report believe generative AI increases internal efficiency (48%) and improves internal collaboration (47%).

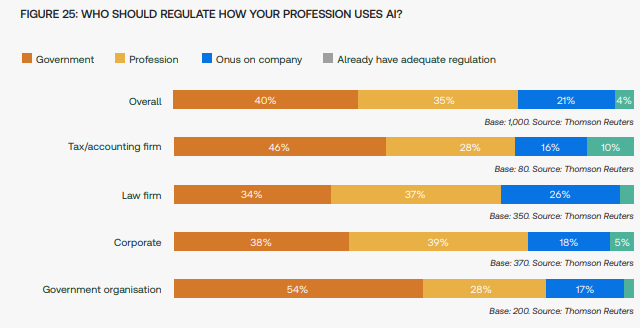

Only a fraction of professionals surveyed believe current generative AI regulation is adequate. Two in five professionals (40%) surveyed believe their government should regulate how their profession uses AI. Legal professionals surveyed also advocated for self-regulation.

One of the most appealing opportunities generative AI offers legal professionals is time savings. Automating manual, laborious, and protracted processes frees legal professionals to spend more time on the crux of their casework.

Survey respondents were receptive to generative AI assisting them at work. However, it is important to be aware that generative AI is no substitute for legal review.

The 2023 case Mata v Avianca is an example of generative AI being used for legal work and going wrong. Lawyers from a New York law firm represented a client in a personal injury case.

The client’s lawyers used ChatGPT to prepare a court filing. Unfortunately for the client, the case citations and other material ChatGPT provided for the brief were made up. Generative AI models can ‘hallucinate’, and hallucinations left unchecked can be a serious problem for lawyers.

Alec Christie, Partner at Clyde & Co, believes that AI will become ubiquitous in the legal profession and across industries, and that foundational principles like accountability hold more value than ever. Speaking at Thomson Reuters’ SYNERGY event in Sydney, Alec said there’s no time to waste.

“We’re at a point where we’re at a crossroads with AI.”

“Whether people realise it or not AI will be used by their team members, it will be used in their organisations,” added Alec.

“If we don’t get on top of the data governance aspect of that, and the frameworks for use of AI, then there’s going to be some significant issues and concerns.”

Mata v Avianca is not an isolated incident. So-called ‘fake cases’ have also surfaced in Canada and the UK. Michael Cohen, former lawyer to former President Donald Trump, used Google Bard for documents submitted in an official court filing.

The generative AI system produced content that turned out to be inaccurate. Consequently, the citations submitted in the court filing were false.

“If you can’t guarantee the source, you can’t guarantee where the information is coming from, you can’t guarantee the quality,” Alec added.

“Quality of data is fundamental, because it’s that old tech motto, ‘garbage in garbage out’.”

Singapore’s Model AI Governance Framework

In January 2019, the Singaporean government released the first edition of the Model AI Governance Framework. The framework guides companies on maintaining good corporate governance over their use of AI technology.

Following public consultation, the Singapore government released the Model AI Governance Framework for Generative AI in May 2024. It addresses the following emerging issues, developments, and safety considerations:

- Accountability: The Singaporean government will deploy incentives like grants, regulatory frameworks, tech and talent resources. Their aim is to encourage ethical and responsible generative AI technologies.

- Data: Protect generative AI training data sets from contamination through proactive security measures.

- Trusted Development and Deployment: Maintain a commitment to best practice in development, evaluation and disclosure to increase transparency around safety and hygiene measures.

- Incident Reporting: Establish an incident management system to help deploy counteractive measures and expedite protective measures.

- Testing and Assurance: Develop standardised generative AI testing through external validation, and increase trust via third-party testing.

- Security: Intercept and eliminate threat vectors emerging via generative AI systems.

- Content Provenance: Make the sources of generative AI content transparent and implement guardrails around contentious data for end-users.

- Safety and Alignment R&D: Cooperate with global generative AI safety regulators to accelerate R&D, ensuring generative AI technology aligns with human intentions and values.

- AI for Public Good: Develop AI systems sustainably and responsibly in the public sector. This comprises providing standardised access to generative AI systems and upskilling professionals.

The framework features nine recommendations for policymakers, generative AI system developers, business leaders and academics to follow. Together, these aim to facilitate a ‘trusted generative AI ecosystem’.

Which AI models can lawyers use responsibly?

As an emerging technology, generative AI will take time to achieve greater levels of automation. The string of adverse incidents arising from the use of AI systems in legal proceedings worldwide demonstrates that AI technologies will not replace human lawyers.

Retrieval-augmented generation (RAG) grounds an AI system’s answers in information retrieved from approved sources, rather than relying only on what the model absorbed during training. This helps to reduce how often the AI system reaches incorrect conclusions, although it does not remove the need for human review. The quality of the data used to train the model can also make a significant difference to the standard of the AI model’s output.
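For readers who want to see the idea in concrete terms, the following is a minimal sketch of the retrieval step in Python. It is an illustration only and does not describe how any commercial product works. The `Passage` type, the two sample passages, the keyword-overlap scoring and the function names are all assumptions made for this example; a production system would typically use a search index or embeddings, and would pass the resulting prompt to a language model.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str  # hypothetical identifier for an approved source
    text: str


# Hypothetical approved corpus; a real system would index vetted legal texts.
APPROVED_PASSAGES = [
    Passage("data-protection-note",
            "Organisations must obtain consent before collecting personal data."),
    Passage("ai-governance-note",
            "Decisions made by AI should be explainable, transparent and fair."),
]


def tokenize(text: str) -> set[str]:
    """Lower-case the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, passages: list[Passage],
             top_k: int = 1, min_overlap: int = 2) -> list[Passage]:
    """Return up to top_k passages sharing at least min_overlap words with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(p.text)), p) for p in passages]
    relevant = [pair for pair in scored if pair[0] >= min_overlap]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in relevant[:top_k]]


def build_prompt(question: str, passages: list[Passage]) -> str | None:
    """Build a prompt limited to retrieved passages, or None if nothing relevant is found."""
    hits = retrieve(question, passages)
    if not hits:
        return None  # decline to answer rather than let the model guess
    context = "\n".join(f"[{p.source}] {p.text}" for p in hits)
    return (
        "Answer using only the sources below and cite the source in brackets.\n"
        f"{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("Should decisions made by AI be explainable?", APPROVED_PASSAGES))
    print(build_prompt("What is the weather today?", APPROVED_PASSAGES))
```

The design choice worth noting is the `None` branch: when no approved passage is relevant, the sketch declines to build a prompt at all, so the model is never asked to answer from memory. A lawyer would still need to review any answer produced from the prompt.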

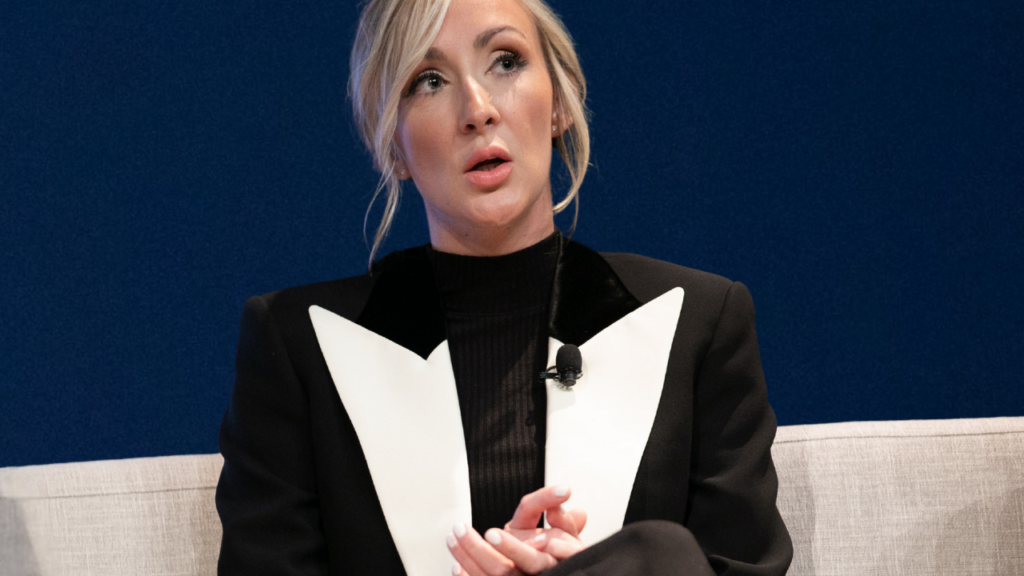

Risk assessments can also help stop unethical generative AI in its tracks. That’s according to Carter Cousineau, Vice President Data and Model (AI/ML) Governance and Ethics at Thomson Reuters. These risk assessments can include process checks and technical tests.

“There was definitely a lot of great due diligence prior to our team starting and coming in,” said Carter.

“I see an opportunity to optimise responsible AI, where understanding the risks of AI opens the door to creating AI systems that are fair and more transparent.”

Thomson Reuters’ CoCounsel, a novel generative AI technology, uses the RAG model architecture. CoCounsel restricts research to verified legal texts from its extensive publications.

According to Carter, it is important to keep the technology’s limitations in mind when developing AI applications. AI developers at Thomson Reuters embed data and AI governance throughout the whole organisation.

“It is something that has multiple control points throughout the lifecycle, especially around customer data sets.”

“I would say creating that refinement around our AI machine learning models and building it integrated into the workflow.”

“Getting very specific on the ethical AI risks, and then reacting with a more proactive approach, I would say, is what we’ve taken,” Carter concluded.