BrownBag Series Recap

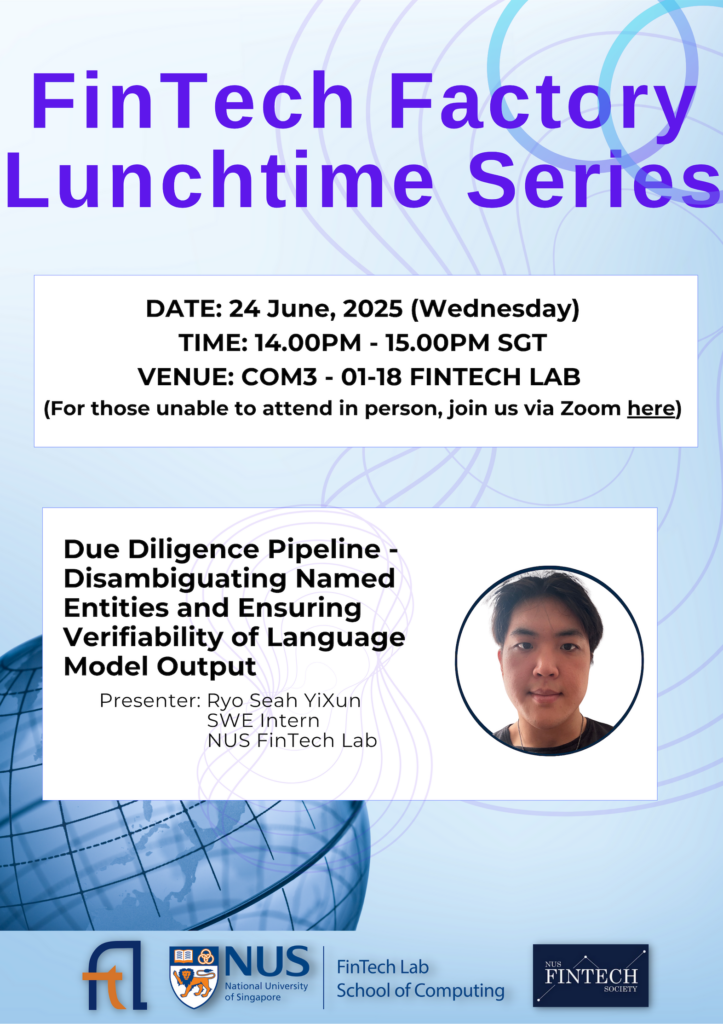

Date: 24 June, 2025

Speaker: Ryo Seah, Intern @ NUS FinTechLab

This BrownBag session focused on automating donor due diligence for philanthropic organizations, presenting a proof-of-concept OSINT pipeline. The discussion examined technical challenges including LLM hallucinations and clustering thresholds while highlighting solutions such as hierarchical clustering, source reliability scoring, and integration with CRM systems for scalable risk management.

An Automated Pipeline for Generating Open Source Intelligence Dossiers for Donor Vetting

Understanding the Problem

Philanthropic organizations face a complex challenge in ensuring comprehensive donor due diligence while managing reputational risks. Current workflows are often manual, fragmented, and time-consuming, requiring staff to piece together unstructured data from scattered sources. To address these inefficiencies, the lab explored how intelligent automation (combining web scraping, large language models, and clustering techniques) can streamline the due diligence process and improve the accuracy and scalability of donor profiling.

Key Challenges

Two persistent challenges stood out:

- LLM Hallucinations – While LLMs are effective at structuring scraped data, they occasionally produce unsupported claims.

  - Solution: Establishing a “chain of trust” by tying each fact directly to its original source.

- Ambiguous Profiles – Similar or identical names often led to merged donor records.

  - Solution: Agglomerative clustering with semantic embeddings flagged potential overlaps for human review.
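To make the clustering idea concrete, here is a minimal sketch of average-linkage agglomerative clustering over cosine distance, written in plain Python so it runs without extra libraries. It is not the lab's implementation: the function names, the toy 3-dimensional "embeddings", and the `threshold=0.3` cut-off are all assumptions made for illustration. In practice the vectors would come from an embedding model and the threshold would need tuning.

```python
import math
from itertools import combinations


def cosine_distance(a, b):
    """Return 1 - cosine similarity for two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def agglomerative_clusters(vectors, threshold):
    """Average-linkage agglomerative clustering with a distance cut-off.

    Starts with one cluster per vector and repeatedly merges the two
    closest clusters until the closest pair is farther apart than
    `threshold`. Returns a sorted list of clusters (lists of indices).
    """
    clusters = [[i] for i in range(len(vectors))]

    def linkage(c1, c2):
        # Average pairwise distance between members of the two clusters.
        total = sum(cosine_distance(vectors[i], vectors[j]) for i in c1 for j in c2)
        return total / (len(c1) * len(c2))

    while len(clusters) > 1:
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: linkage(clusters[pair[0]], clusters[pair[1]]),
        )
        if linkage(clusters[a], clusters[b]) > threshold:
            break
        merged = sorted(clusters[a] + clusters[b])
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)] + [merged]
    return sorted(clusters)


# Toy vectors for four scraped snippets that all mention the same name.
# The values are made up; real embeddings would come from an embedding model.
snippets = [
    ("banker bio", [0.9, 0.1, 0.0]),
    ("bank annual report", [0.8, 0.2, 0.1]),
    ("marathon results", [0.0, 0.1, 0.9]),
    ("running club page", [0.1, 0.0, 0.8]),
]
clusters = agglomerative_clusters([vec for _, vec in snippets], threshold=0.3)

# More than one cluster under a single name suggests different people,
# so the record is flagged for human review rather than merged silently.
needs_review = len(clusters) > 1
```

With these toy vectors the two finance snippets and the two running snippets end up in separate clusters, so the name would be flagged for review instead of being merged into one donor record.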

Other hurdles included API rate limits, blocked scraping on certain sites, and the scalability of processing larger name lists.

Technical Implementation

The demonstrated pipeline (Stage 1: OSINT Automation) follows a three-step workflow:

- Search: SerpAPI for Google results, Playwright for scraping dynamic content.

- Aggregate: LLMs structure data into clean key-value pairs, removing noise.

- Cluster: Embeddings identify duplicate or ambiguous profiles, generating YAML outputs for PDF dossiers.

This approach keeps every fact traceable to its source, which makes unsupported LLM claims easier to detect and significantly reduces hallucination risk.
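One way to picture the fact-to-source link is a record that carries its own evidence, plus a check that the evidence really appears on the cited page. The sketch below is an assumption about how such a check could look, not the pipeline's actual code: the `Fact` fields, the `is_grounded` helper, and the example URL and text are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    key: str         # e.g. "employer"
    value: str       # structured value produced by the LLM
    source_url: str  # page the value was taken from
    evidence: str    # verbatim snippet from that page supporting the value


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so minor formatting differences are ignored."""
    return " ".join(text.lower().split())


def is_grounded(fact: Fact, pages: dict[str, str]) -> bool:
    """True only if the evidence occurs in the cited page and contains the value."""
    page_text = pages.get(fact.source_url)
    if page_text is None:
        return False
    evidence = _normalize(fact.evidence)
    return (
        bool(evidence)
        and evidence in _normalize(page_text)
        and _normalize(fact.value) in evidence
    )


# Hypothetical scraped page and two LLM-produced facts.
pages = {
    "https://example.org/profile": "Jane Tan is a director at Example Holdings Pte Ltd.",
}
supported = Fact(
    "employer", "Example Holdings",
    "https://example.org/profile", "director at Example Holdings",
)
unsupported = Fact(
    "net_worth", "USD 2 billion",
    "https://example.org/profile", "net worth of USD 2 billion",
)

# Only facts whose evidence can be found in the cited source are kept.
verified = [fact for fact in (supported, unsupported) if is_grounded(fact, pages)]
```

A substring check like this is deliberately strict: a paraphrased but correct claim would be rejected, which may be an acceptable trade-off when the goal is to keep unsupported statements out of a dossier.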

Research Questions & Discussion

The BrownBag session not only outlined the proof-of-concept pipeline but also drew critical questions from the internal audience, reflecting both technical and strategic considerations:

- How can the system be made more user-friendly, perhaps through a GUI with simple input fields rather than relying on command-line operations?

- What statistical approaches (e.g., elbow method) could refine clustering thresholds and improve the disambiguation of donor profiles?

- Can Named Entity Recognition (NER) be integrated to automatically filter out irrelevant paragraphs during web scraping?

- How should we design a source reliability scoring system that prioritizes information from more authoritative and trustworthy domains over less reliable sources?

- Would a saturation-based link collection approach, instead of a fixed link limit, improve coverage and accuracy of profiles?
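The saturation idea in the last question can be sketched as a stopping rule: keep visiting links until several in a row contribute nothing new. The code below is only an illustration of that rule under stated assumptions. The `patience` and `max_links` parameters, the `extract_facts` callback, and the fake page data are hypothetical and were not part of the session.

```python
from typing import Callable, Iterable


def collect_until_saturated(
    links: Iterable[str],
    extract_facts: Callable[[str], set[str]],
    patience: int = 3,
    max_links: int = 50,
) -> tuple[list[str], set[str]]:
    """Visit links in order and stop once `patience` consecutive links add no new facts.

    `max_links` is a hard cap so the loop still terminates on very long result lists.
    """
    seen_facts: set[str] = set()
    visited: list[str] = []
    stale = 0  # consecutive links that produced nothing new
    for link in links:
        if len(visited) >= max_links or stale >= patience:
            break
        visited.append(link)
        new_facts = extract_facts(link) - seen_facts
        if new_facts:
            seen_facts |= new_facts
            stale = 0
        else:
            stale += 1
    return visited, seen_facts


# Fake search results: each "link" maps to the facts a scraper would extract.
fake_pages = {
    "a": {"f1", "f2"},
    "b": {"f2"},
    "c": {"f3"},
    "d": {"f1"},
    "e": {"f3"},
    "f": {"f4"},
}
visited, facts = collect_until_saturated(
    list(fake_pages), lambda link: fake_pages[link], patience=2
)
```

In this toy run the collector stops after link "e" because "d" and "e" both repeat known facts, so "f" is never visited. That illustrates the trade-off the question points at: a small `patience` saves requests but can miss late, novel sources.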

Key Takeaways

- Proof of Concept: Successfully processed 5 names per batch with 70–80% scrape success, producing structured and verifiable YAML/PDF outputs.

- Audience Feedback: Raised crucial ideas for GUI development, clustering refinements, NER, and open-source LLM exploration.

- Future Roadmap: Enhancements include source reliability scoring, fuzzy matching across sources, and integration of risk indicators.

- Strategic Outlook: Beyond automation, the project aims to build a full Intelligence & Risk Engine to support long-term donor due diligence.
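As a rough illustration of the source reliability scoring on the roadmap, a first version could be a simple domain-to-weight lookup used to order claims. Everything in this sketch is an assumption: the domains, the weights, the default value, and the function names are hypothetical, and real weights would need to be set and reviewed by analysts.

```python
from urllib.parse import urlparse

# Hypothetical reliability weights per domain (0 = untrusted, 1 = fully trusted).
DOMAIN_WEIGHTS = {
    "gov.sg": 0.95,
    "example-news.com": 0.8,
    "example-blog.net": 0.3,
}
DEFAULT_WEIGHT = 0.4  # assumed fallback for domains not in the table


def source_weight(url: str) -> float:
    """Return the weight of the longest listed domain that matches the URL's host."""
    host = (urlparse(url).hostname or "").lower()
    matches = [d for d in DOMAIN_WEIGHTS if host == d or host.endswith("." + d)]
    if not matches:
        return DEFAULT_WEIGHT
    return DOMAIN_WEIGHTS[max(matches, key=len)]


def rank_claims(claims: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Sort (claim, url) pairs so claims from higher-weight sources come first."""
    return sorted(claims, key=lambda claim: source_weight(claim[1]), reverse=True)


claims = [
    ("Director of Example Holdings", "https://sub.example-blog.net/post"),
    ("Director of Example Holdings", "https://www.agency.gov.sg/register"),
    ("Board member since 2019", "https://example-news.com/article"),
]
ranked = rank_claims(claims)
```

A static table like this is easy to audit, which matters for due diligence, but it treats every page on a domain as equally reliable; a fuller design would likely need per-source review as well.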

The session closed with a shared recognition: while automation cannot replace human oversight, it can significantly reduce repetitive effort, improve accuracy, and enable staff to focus on high-value decision-making.